🔮 #3 Top Forecaster: "Nothing Ever Happens"

Inside Peter Wildeford’s award-winning forecasting strategy

After our recent interview with Doomberg, The Oracle received a message from a reader who was eager to take the other side on a number of his predictions.

The message was from Peter Wildeford, the #3 most-medaled forecaster on Metaculus, ranked 3rd of 17,645.

Like “Semi,” who went from $286 to a bankroll of over $1 million on Polymarket, Peter mainly exploits what he sees as a bias towards dramatic headlines. The Oracle spoke with Peter about how he developed his edge and his takes on a number of active Polymarket markets.

This interview has been edited for length. All answers are Peter’s own.

How did you get into forecasting?

I started on Metaculus around 2020, drawn in by COVID. It felt like an unprecedented event, and I wanted to understand how some people were able to see it coming while I couldn't. But I was pretty terrible that first year – I prefer people forget that year existed.

I was making classic beginner mistakes, like just drawing lines on graphs and assuming they'd continue forever. With COVID, I was underestimating the spread, expecting it to be contained like SARS. I generally have a "nothing ever happens" approach to forecasting, which is right most of the time but painfully wrong when things actually do happen.

What did you change to improve your accuracy?

The key lesson was humility: realizing there's a lot I don't know and learning when to bow out. I became much more choosy about what I take positions on, having more respect for when the market knows more than me versus when I actually have something to add.

I also learned the importance of teams. For the 2022 midterms, I worked with two other people who had expertise in demographics and candidate positions. Our theory was that demographics would matter a lot for predicting elections, especially following Roe v Wade. We thought candidate positions on abortion and how extreme versus moderate they were would change election outcomes in ways the polls weren't capturing.

We expected Democrats to do much better than conventional wisdom suggested, potentially even winning the House. They fell short of that but came much closer than the 538 model or election markets were predicting.

Tell me about your approach to building forecasting teams.

Over the years, I've assembled a Rolodex of experts across different topics. I watch people's track records: when they say something, do they end up being right? I know who I trust on different issues.

For example, when Israel attacked Iran, I know three people with good track records on Middle East issues. I talk to all of them and try to draw the through line. To be frank, I add very little of my own value in my forecasting. I just make out like a bandit by having these people I trust and drawing good averages across them.

These are largely people I've recruited through Twitter, though some are personal friends. I'm not getting any insider knowledge, it's all public information. But having access to their expertise and their past track record is pretty key to my alpha. I keep that list carefully guarded. Some people don't even know they're on it.

Can you give an example of how this works?

The US-Iran situation was really underrated in terms of the US’ ability to just bomb Iran and leave without major consequences. Everyone's minds were blown by this, but it's exactly what happened with Soleimani under the first Trump administration.

The nuclear arms experts and Iranian experts I knew with good track records were all in on the "Soleimani hypothesis," that we can just go in and go out and there's nothing Iran can do about it. The concept of escalation dominance means that basically any way Iran tries to escalate, we can win, and they have so much more to lose than we do.

You mentioned having a "nothing ever happens" approach. How does that translate to your predictions?

There's a well-measured and confirmed systematic pro-Yes bias in prediction markets and forecasting tournaments: if you take the community average across all questions for what percentage they think will be "Yes," it's always much higher than the percentage that actually turn out "Yes."

I think it's because when you pose a provocative question to someone, it prompts their brain to come up with scenarios for how it could happen. But there's no particular reason to think it will happen just because you're asking the question. The mere fact that a question is posed doesn't mean it's more likely.
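As a rough illustration of how the pro-Yes bias Peter describes could be measured, the sketch below compares the average forecast probability across a set of resolved questions with the share that actually resolved "Yes." The function name `yes_bias` and the sample numbers are made up for illustration; they are not Metaculus or Polymarket data, and this is not necessarily how the bias was measured in practice.

```python
from statistics import mean


def yes_bias(forecasts: list[float], outcomes: list[int]) -> float:
    """Return mean forecast probability minus the actual "Yes" rate.

    forecasts: community probability of "Yes" for each resolved question (0 to 1).
    outcomes:  1 if the question resolved "Yes", 0 if it resolved "No".
    A positive result means the crowd leaned too far towards "Yes" on average.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must be non-empty and equal length")
    return mean(forecasts) - mean(outcomes)


# Hypothetical toy data: five resolved questions, one of which resolved "Yes".
toy_forecasts = [0.30, 0.20, 0.60, 0.10, 0.40]
toy_outcomes = [0, 0, 1, 0, 0]

bias = yes_bias(toy_forecasts, toy_outcomes)
print(f"Average forecast minus actual Yes rate: {bias:+.2f}")
```

In this toy set the average forecast is 32% while only 20% of questions resolved "Yes," so the gap is positive, which is the direction of bias described above.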

What are some of your current big "No" takes?

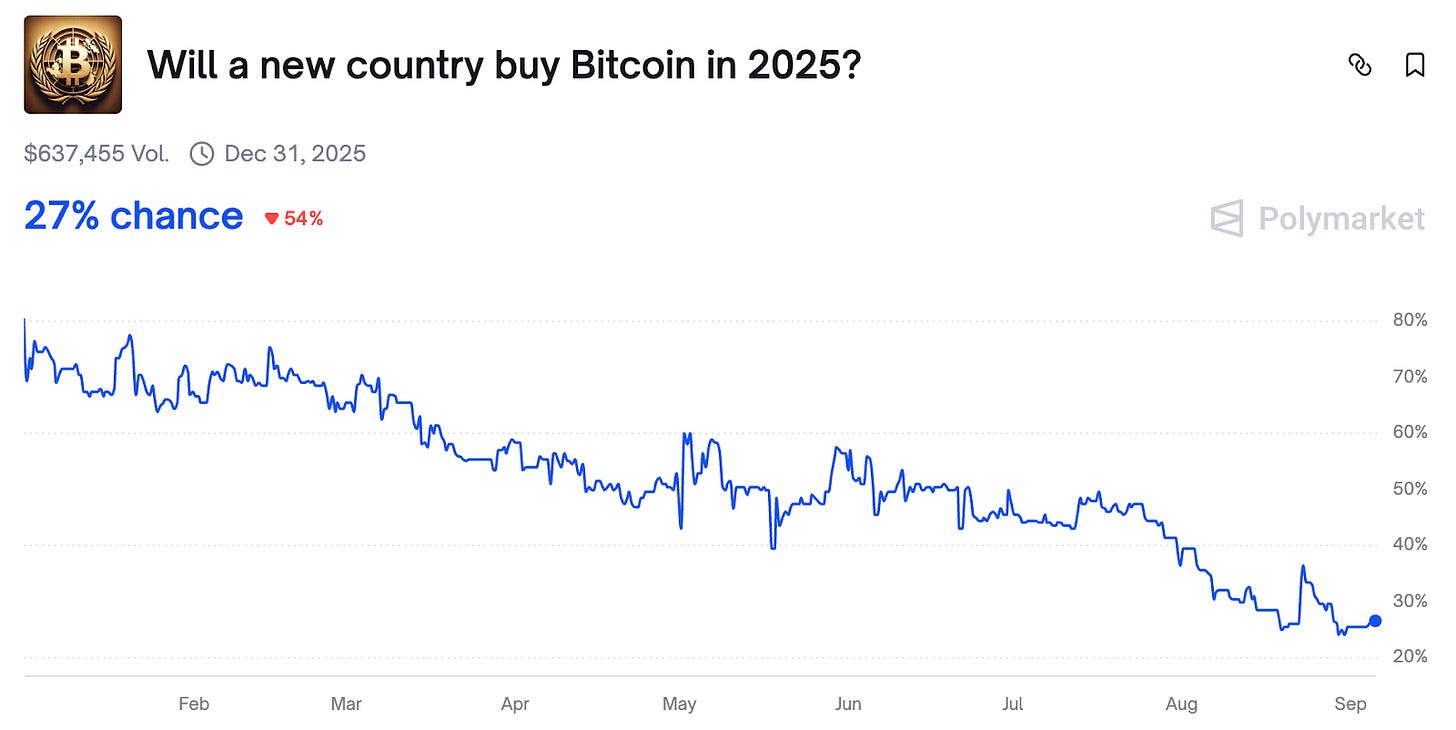

Right now I'm confident that no new country will buy Bitcoin this year. Trump crypto mania was pushing that way up beyond any plausibility. There's just really no rational incentive for countries to do this. Even El Salvador, which did buy Bitcoin, hasn't seen much benefit.

I'm also predicting "No" on several Time Person of the Year candidates. People seem overconfident about picks like "Artificial Intelligence" (🔮31%) but there are lots of AI options that aren't the generic concept, like Sam Altman or Jensen Huang or specific companies or products. Plus they picked Taylor Swift over AI in 2023, so I'm not convinced they'll go with AI now.

For Pope Leo XIV (🔮24%), Time usually only picks the Pope when they do something consequential in policy, not just because there's a new Pope. And for Donald Trump (🔮 8%), he already won twice, he won last year. Why would they pick him two years in a row? It's never been the same person consecutively.

Biggest misses?

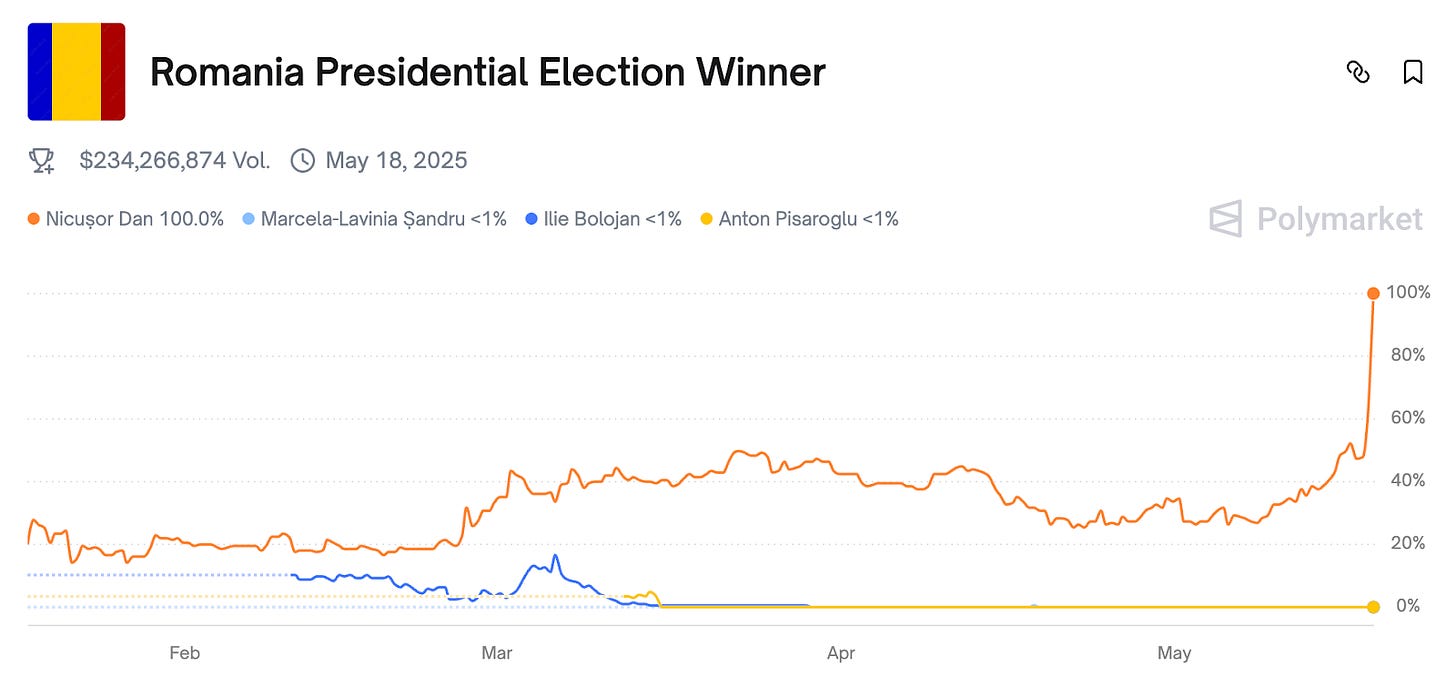

The Romanian presidential election was brutal - my single biggest miss ever. I tried to do a polls-based model, but polls don't work in every country. You have to be careful to only apply polling models to countries where polls actually work.

That election was just absolutely bonkers. George Simion won huge in the first round after a previous election was annulled - I'm surprised more people don't talk about how insane it is that Romania just canceled an election. Then in the second round you had this huge dramatic surge in turnout and coalition changes that I totally didn't expect.

I assumed that no one had ever won a first round by that much and then failed to clinch the second round. I was very wrong.

You write a lot about AI progress. Do you use AI in your workflow?

I definitely use AI as a sanity-checking tool, like another person in my expert network. I paste rules into chat and ask what it thinks is likely. It can rapidly research things and surface perspectives I haven't considered.

But I haven't been impressed by having models state odds and copy-pasting those forecasts. I think current AI products can't cut it for competitive forecasting, though I'm bullish on AI in the long run so I'll probably be out-competed in a few years.

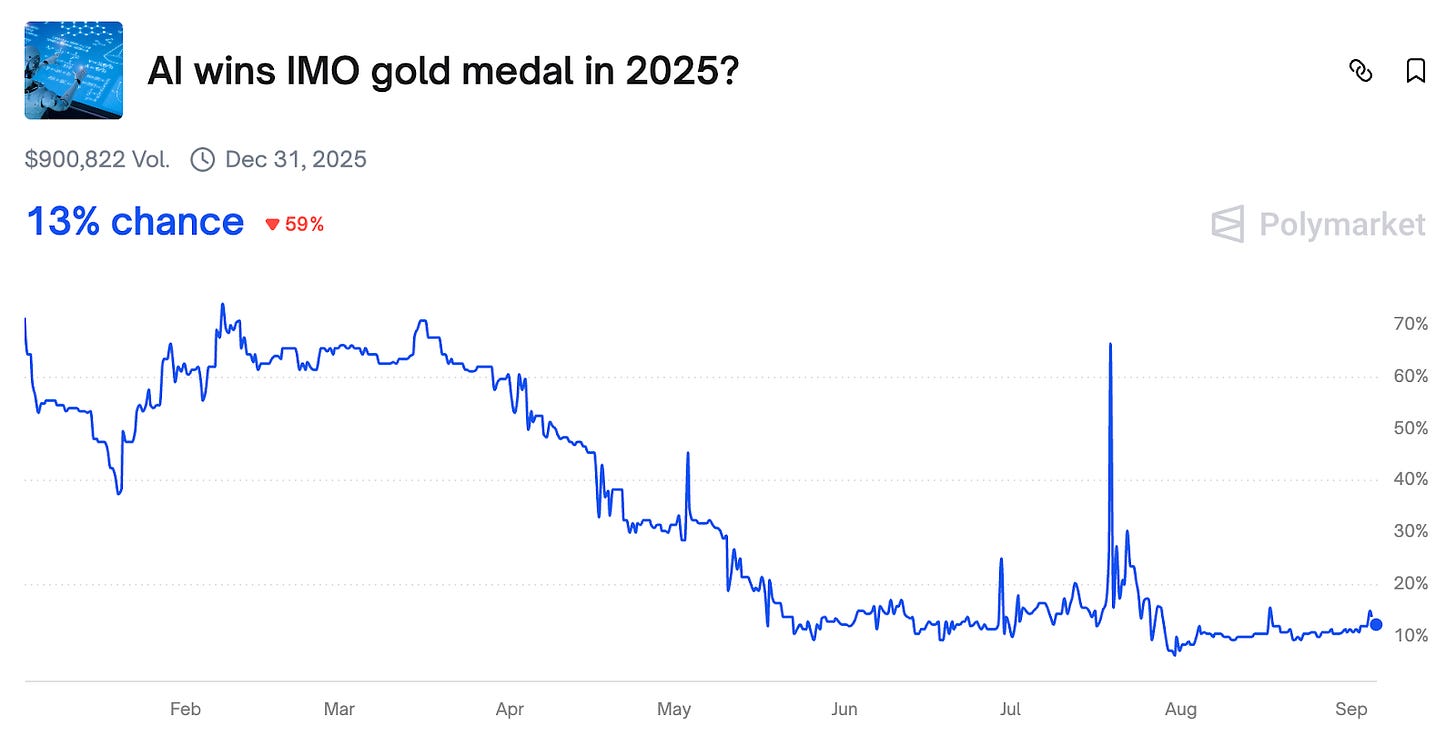

But actually I’m quite bearish on the AI wins IMO gold medal market. The key here is you have to really read the rules. I’m confident a private AI system would win, but the market resolution rules require the AI to be open source and meet specific IMO Grand Challenge requirements. It is very easy to imagine Google or OpenAI models winning the prize, but very difficult to imagine them making the model open source because the development would require tens of millions of dollars. Reading the rules in meticulous detail is always one of my favorite forecasting strategies.

Any other "nothing ever happens" takes?

Like with Iran, I think the Venezuela situation with Trump and Maduro will probably just fade from the news. Unlike Iran where there was a specific nuclear threshold and Israeli pressure, there's not some countdown clock we need to avert with Venezuela. It seems more like intimidation and gunboat diplomacy rather than something that will escalate.

Same with Ukraine. Doomberg predicted a sudden collapse in Ukraine, and I think it's just much easier to hold positions than capture new ground. There's a strong defense advantage, and the European Union will probably continue resourcing Ukraine. You'd need special evidence to suggest Russia is poised for a breakthrough, and we don't really have that.

Any final words of wisdom?

I personally love mopping up the 90% that ought to be 95%. It's slow and steady wins the race, the anti-Doomberg approach. I'll enter at 88% or 90% because I think they should be 95%.

You do have the burden of being right 19 out of 20 times. But there's just a systematic bias towards the thrill of the 10-to-1 underdogs, and I love fading that.
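The arithmetic behind buying at 90% when the true chance is 95% can be sketched as follows. This is a simplified model, not Peter's own calculation: it assumes a share that pays $1 if the outcome happens and $0 otherwise, ignores fees, spreads, and the time value of money, and the function names are hypothetical.

```python
def expected_profit_per_share(price: float, true_prob: float) -> float:
    """Expected profit from one share bought at `price` that pays 1 if it resolves in your favor.

    With probability `true_prob` you gain (1 - price); otherwise you lose `price`.
    Algebraically this reduces to true_prob - price.
    """
    if not (0 < price < 1) or not (0 <= true_prob <= 1):
        raise ValueError("price must be in (0, 1) and true_prob in [0, 1]")
    return true_prob * (1 - price) - (1 - true_prob) * price


def expected_return(price: float, true_prob: float) -> float:
    """Expected profit as a fraction of the amount staked."""
    return expected_profit_per_share(price, true_prob) / price


# Entering at 90 cents when you believe the true probability is 95%.
profit = expected_profit_per_share(0.90, 0.95)
ret = expected_return(0.90, 0.95)
print(f"Expected profit per share: ${profit:.3f}")
print(f"Expected return on stake: {ret:.1%}")

# The flip side: at a 90-cent entry, each loss wipes out the gains from nine wins,
# so the position only pays if you really are right more than 90% of the time.
print(f"Break-even hit rate at a 0.90 entry: {0.90:.0%}")
```

Under these assumptions the edge is about 5 cents per share, or roughly 5.6% on the money staked, which is why the approach depends on being right about 19 times out of 20.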

It's the "nothing ever happens" philosophy applied systematically. Most provocative predictions don't come true, even if they make for exciting headlines.

Follow Peter Wildeford on X and Substack.

Disclaimer

Nothing in The Oracle is financial, investment, legal or any other type of professional advice. Anything provided in any newsletter is for informational purposes only and is not meant to be an endorsement of any type of activity or any particular market or product. Terms of Service on polymarket.com prohibit US persons and persons from certain other jurisdictions from using Polymarket to trade, although data and information is viewable globally.

I don't think Doomberg predicted any of that as happening... he liked the long-shot odds on those... likelihood of it happening very slim. However, at least 1 EU gov falling apart before end of 2026 is likely happening.

"I assumed that no one had ever won a first round by that much and then failed to clinch the second round. I was very wrong." I mean I think you were right as of May 17 2025 (with a decent sample size if you include subnational results)!!